Problem: The Prisoner's Dilemma and Strategy Simulation

The Prisoner’s Dilemma is a classic example in game theory. It shows why two rational individuals might fail to cooperate even when cooperating would leave both of them better off.

This example analyzes the payoff matrix and simulates multiple rounds of the dilemma using various strategies to observe long-term outcomes.

Objective

- Define the payoff matrix for the Prisoner’s Dilemma.

- Simulate repeated interactions using:

  - Always Defect

  - Always Cooperate

  - Tit-for-Tat (mimic the opponent’s previous move)

- Visualize the accumulated payoffs over multiple rounds for each strategy.

Python Code

    import numpy as np

Explanation of the Code

Payoff Matrix:

- Each cell in the matrix holds a pair of payoffs, one for Player $1$ and one for Player $2$, for a given combination of choices (cooperate/defect).

- Mutual cooperation pays both players more than mutual defection, but defecting against a cooperating opponent yields the highest single-round payoff.
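The payoff structure described above can be sketched as a small NumPy array. The numeric values (5, 3, 1, 0) are the conventional textbook choice and are an assumption here, since the text does not state them. The names `PAYOFFS`, `COOPERATE`, and `DEFECT` are likewise illustrative.

```python
import numpy as np

# Moves are encoded as integers so they can index the payoff array directly.
COOPERATE, DEFECT = 0, 1

# Assumed payoff values (not given in the text):
# T = temptation, R = reward, P = punishment, S = sucker's payoff.
T, R, P, S = 5, 3, 1, 0

# PAYOFFS[move_1, move_2] -> [payoff to Player 1, payoff to Player 2]
PAYOFFS = np.array([
    [[R, R], [S, T]],  # Player 1 cooperates; Player 2 cooperates / defects
    [[T, S], [P, P]],  # Player 1 defects;    Player 2 cooperates / defects
])

# The two inequalities that make this game a Prisoner's Dilemma.
assert T > R > P > S
assert 2 * R > T + S

payoff_1, payoff_2 = PAYOFFS[DEFECT, COOPERATE]
print(payoff_1, payoff_2)  # 5 0
```

Indexing by the two moves keeps the lookup to a single expression. The second inequality ensures that taking turns exploiting each other does not beat steady mutual cooperation.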

Strategies:

- Always Cooperate: Always chooses to cooperate.

- Always Defect: Always chooses to defect.

- Tit-for-Tat: Mimics the opponent’s previous move, encouraging reciprocity. On the first round, when there is no previous move, it conventionally cooperates.
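One way to express the three strategies is as functions that receive both move histories and return the next move. This is a minimal sketch: the function names and the two-argument signature are assumptions, as is having Tit-for-Tat open with cooperation.

```python
COOPERATE, DEFECT = 0, 1


def always_cooperate(own_history, opponent_history):
    """Cooperate regardless of what has happened so far."""
    return COOPERATE


def always_defect(own_history, opponent_history):
    """Defect regardless of what has happened so far."""
    return DEFECT


def tit_for_tat(own_history, opponent_history):
    """Copy the opponent's previous move.

    Assumption: cooperate on the first round, when there is no history yet.
    """
    if not opponent_history:
        return COOPERATE
    return opponent_history[-1]


print(tit_for_tat([], []))                  # 0 (cooperate on the first round)
print(tit_for_tat([COOPERATE], [DEFECT]))   # 1 (retaliate after a defection)
```

Giving every strategy the same signature means a simulation loop can call any of them interchangeably, even though the two unconditional strategies ignore their arguments.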

Simulation:

- The game is repeated for a fixed number of rounds.

- Accumulated scores and move histories are recorded for both players.
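The repeated game described above might be implemented along the following lines. This is a sketch, not the original code: the function `play`, the payoff values, and the choice to record running totals per round are all assumptions.

```python
COOPERATE, DEFECT = 0, 1

# Assumed payoffs: (move_1, move_2) -> (payoff to Player 1, payoff to Player 2)
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),
    (COOPERATE, DEFECT): (0, 5),
    (DEFECT, COOPERATE): (5, 0),
    (DEFECT, DEFECT): (1, 1),
}


def always_defect(own_history, opponent_history):
    return DEFECT


def tit_for_tat(own_history, opponent_history):
    # Assumption: cooperate first, then copy the opponent's last move.
    return opponent_history[-1] if opponent_history else COOPERATE


def play(strategy_1, strategy_2, rounds=10):
    """Play a fixed number of rounds; return running scores and move histories."""
    history_1, history_2 = [], []
    scores_1, scores_2 = [], []  # accumulated payoff after each round
    total_1 = total_2 = 0
    for _ in range(rounds):
        # Both moves are chosen before either history is updated,
        # so the players act simultaneously.
        move_1 = strategy_1(history_1, history_2)
        move_2 = strategy_2(history_2, history_1)
        payoff_1, payoff_2 = PAYOFFS[(move_1, move_2)]
        total_1 += payoff_1
        total_2 += payoff_2
        history_1.append(move_1)
        history_2.append(move_2)
        scores_1.append(total_1)
        scores_2.append(total_2)
    return scores_1, scores_2, history_1, history_2


scores_1, scores_2, moves_1, moves_2 = play(tit_for_tat, always_defect, rounds=5)
print(moves_1)   # [0, 1, 1, 1, 1]
print(moves_2)   # [1, 1, 1, 1, 1]
print(scores_1)  # [0, 1, 2, 3, 4]
print(scores_2)  # [5, 6, 7, 8, 9]
```

In this run Tit-for-Tat is exploited only once, in the first round, and matches Always Defect from then on.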

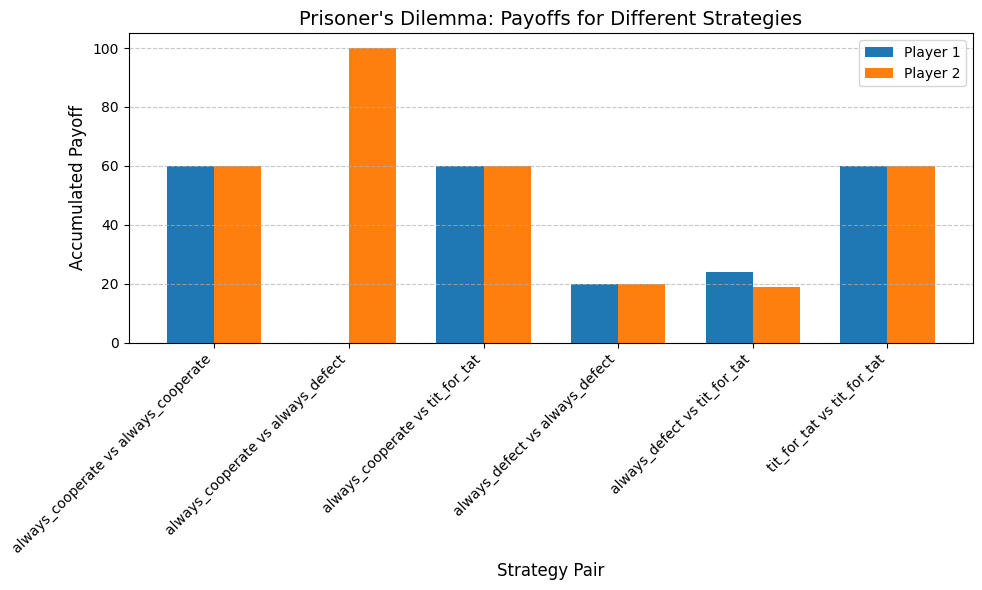

Visualization:

- Bar plots display the total payoffs for Player $1$ and Player $2$ across all strategy pairs.

- The results highlight how different strategies perform against each other.
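A grouped bar chart of this kind could be produced roughly as follows, using Matplotlib. The payoff values, the ten-round length, the helper `total_payoffs`, and the output filename `payoffs.png` are assumptions made for illustration. The figure this produces is not necessarily identical to the one shown in this article.

```python
import itertools

import matplotlib.pyplot as plt
import numpy as np

COOPERATE, DEFECT = 0, 1

# Assumed payoffs: (move_1, move_2) -> (payoff to Player 1, payoff to Player 2)
PAYOFFS = {
    (COOPERATE, COOPERATE): (3, 3),
    (COOPERATE, DEFECT): (0, 5),
    (DEFECT, COOPERATE): (5, 0),
    (DEFECT, DEFECT): (1, 1),
}

STRATEGIES = {
    "Always Cooperate": lambda own, other: COOPERATE,
    "Always Defect": lambda own, other: DEFECT,
    # Assumption: Tit-for-Tat cooperates on the first round.
    "Tit-for-Tat": lambda own, other: other[-1] if other else COOPERATE,
}


def total_payoffs(strategy_1, strategy_2, rounds):
    """Return the total payoff of each player after the given number of rounds."""
    history_1, history_2 = [], []
    total_1 = total_2 = 0
    for _ in range(rounds):
        move_1 = strategy_1(history_1, history_2)
        move_2 = strategy_2(history_2, history_1)
        payoff_1, payoff_2 = PAYOFFS[(move_1, move_2)]
        total_1 += payoff_1
        total_2 += payoff_2
        history_1.append(move_1)
        history_2.append(move_2)
    return total_1, total_2


ROUNDS = 10  # assumed number of rounds
pairs = list(itertools.product(STRATEGIES, repeat=2))
totals = np.array(
    [total_payoffs(STRATEGIES[a], STRATEGIES[b], ROUNDS) for a, b in pairs]
)

# One group of two bars (Player 1, Player 2) per strategy pair.
x = np.arange(len(pairs))
width = 0.4
fig, ax = plt.subplots(figsize=(12, 5))
ax.bar(x - width / 2, totals[:, 0], width, label="Player 1")
ax.bar(x + width / 2, totals[:, 1], width, label="Player 2")
ax.set_xticks(x)
ax.set_xticklabels([f"{a}\nvs.\n{b}" for a, b in pairs], fontsize=8)
ax.set_ylabel(f"Total payoff over {ROUNDS} rounds")
ax.set_title("Total payoffs for each strategy pair")
ax.legend()
fig.tight_layout()
fig.savefig("payoffs.png")  # or plt.show() in an interactive session
```

Plotting all nine ordered pairs, rather than the six unordered ones, shows each matchup from both players' perspectives.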

Results and Insights

Tit-for-Tat Performance:

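- Tit-for-Tat typically performs well. It sustains mutual cooperation when paired with Always Cooperate or with itself, and it answers defection with defection, which limits how far it can be exploited.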

Always Defect:

- It exploits Always Cooperate in every round, but when paired with itself or Tit-for-Tat it ends up in mutual defection, which pays both players less than mutual cooperation would.

Always Cooperate:

- Vulnerable to exploitation but performs well in fully cooperative scenarios.
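These observations can be checked with a short worked calculation. The symbols are assumptions not used elsewhere in this article: $N$ is the number of rounds, and $T > R > P > S$ are the temptation, reward, punishment, and sucker's payoffs. Tit-for-Tat is assumed to cooperate on the first round. The total payoffs $U$ are then:

$$
\begin{array}{lll}
\text{Tit-for-Tat vs. Always Defect:} & U_{\text{TFT}} = S + (N-1)P, & U_{\text{AD}} = T + (N-1)P \\
\text{Always Cooperate vs. Always Defect:} & U_{\text{AC}} = NS, & U_{\text{AD}} = NT \\
\text{Always Defect vs. Always Defect:} & U_1 = U_2 = NP & \\
\text{Any pairing of Always Cooperate and Tit-for-Tat:} & U_1 = U_2 = NR &
\end{array}
$$

With the conventional values $T=5$, $R=3$, $P=1$, $S=0$ and $N=10$, these give $9$ versus $14$, $0$ versus $50$, $10$ each, and $30$ each. Always Defect beats Tit-for-Tat head-to-head by only $T - S$, however long the game runs, while mutual cooperation earns each player $N(R - P)$ more than mutual defection.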

This simulation illustrates the dynamics of strategic decision-making in repeated games: reciprocal strategies can sustain cooperation, while unconditional strategies either exploit their opponent or are exploited.