Linear Transformations in Python

I’ll provide an example problem about linear transformations, solve it using Python, and visualize the results with graphs.

Here’s a comprehensive example:

Linear Transformation Example and Solution with Python

Let’s consider a linear transformation $T:\mathbb{R}^2 \to \mathbb{R}^2$ defined by the matrix:

$$T = \begin{pmatrix} 2 & 1 \\ 1 & 3 \end{pmatrix}$$

We’ll solve the following problems:

- Find the transformed coordinates of several points

- Visualize how this transformation affects a unit square

- Compute the eigenvalues and eigenvectors of the transformation

- Visualize the effect of the transformation on the eigenvectors

```python
import numpy as np
```

Explanation of the Solution

1. Defining the Linear Transformation

We define a linear transformation T using a 2×2 matrix:

$$T = \begin{pmatrix} 2 & 1 \\ 1 & 3 \end{pmatrix}$$

This matrix represents the transformation. We can apply it to any vector $\vec{v} \in \mathbb{R}^2$ by matrix multiplication: $T\vec{v}$.
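Here is a minimal NumPy sketch of this step. It assumes the matrix is stored as a 2×2 array named `T`, and the helper `apply` is a hypothetical name chosen for illustration. The `@` operator performs the matrix-vector product. The final check illustrates the linearity property $T(a\vec{u} + b\vec{w}) = aT\vec{u} + bT\vec{w}$, which is what makes $T$ a linear transformation.

```python
import numpy as np

# The transformation matrix T from the example.
T = np.array([[2, 1],
              [1, 3]])

def apply(T, v):
    """Hypothetical helper: return T v, the image of vector v."""
    return T @ np.asarray(v)

v = np.array([1, 1])
print(apply(T, v))  # [3 4]

# Linearity check: T(a*u + b*w) == a*T(u) + b*T(w) for any scalars a, b.
u, w = np.array([1.0, -2.0]), np.array([0.5, 4.0])
a, b = 3.0, -1.5
lhs = apply(T, a * u + b * w)
rhs = a * apply(T, u) + b * apply(T, w)
print(np.allclose(lhs, rhs))  # True
```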

2. Transforming Points

When we apply the transformation to specific points, we get:

- The point (1,0) transforms to (2,1)

- The point (0,1) transforms to (1,3)

- The point (1,1) transforms to (3,4)

- The point (−1,2) transforms to (0,5)

This demonstrates how the linear transformation maps points from the input space to the output space.
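Instead of transforming points one at a time, you can store them as the columns of a single 2×4 array, and one matrix product then transforms them all. This is a sketch, and the array names `points` and `transformed` are illustrative.

```python
import numpy as np

T = np.array([[2, 1],
              [1, 3]])

# Each column is one input point: (1,0), (0,1), (1,1), (-1,2).
points = np.array([[1, 0, 1, -1],
                   [0, 1, 1,  2]])

# One matrix product maps every column at once.
transformed = T @ points

for p, q in zip(points.T, transformed.T):
    print(f"{tuple(p.tolist())} -> {tuple(q.tolist())}")
```

The last column confirms that $(-1, 2)$ maps to $(0, 5)$: $2 \cdot (-1) + 1 \cdot 2 = 0$ and $1 \cdot (-1) + 3 \cdot 2 = 5$.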

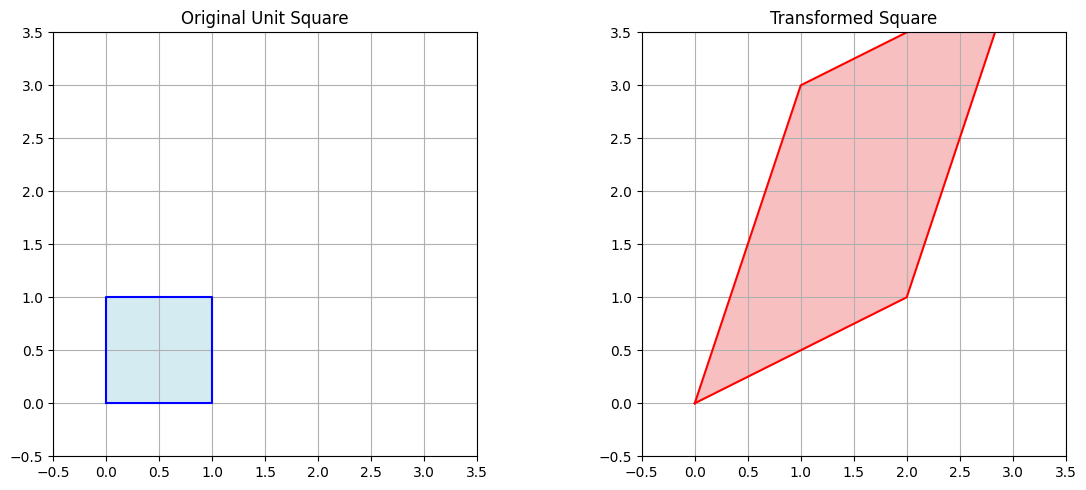

3. Visualization of the Transformation

Linear Transformation Matrix T:
[[2 1]
 [1 3]]
Original Points: [1, 0] [0, 1] [1, 1] [-1, 2]
Transformed Points: [2, 1] [1, 3] [3, 4] [0, 5]

The code visualizes how the unit square (with vertices at (0,0), (1,0), (1,1), and (0,1)) is transformed by T.

As the first visualization shows, the transformation stretches, rotates, and skews the square into a parallelogram with vertices (0,0), (2,1), (3,4), and (1,3).
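The sketch below covers the computation behind the square plot and uses only NumPy. A plotting call, such as matplotlib's `plt.fill`, would take these vertices as input, but that call is omitted here. The code maps the four corners of the unit square, then checks that opposite sides of the image remain equal and parallel, which makes the result a parallelogram.

```python
import numpy as np

T = np.array([[2, 1],
              [1, 3]])

# Corners of the unit square in counter-clockwise order, one per column.
square = np.array([[0, 1, 1, 0],
                   [0, 0, 1, 1]])

image = T @ square
print(image.T.tolist())  # [[0, 0], [2, 1], [3, 4], [1, 3]]

# Edge vectors of the image polygon (each corner to the next, wrapping around).
edges = np.roll(image, -1, axis=1) - image
# Opposite edges are negatives of each other, so the image is a parallelogram.
print(np.array_equal(edges[:, 0], -edges[:, 2]),
      np.array_equal(edges[:, 1], -edges[:, 3]))  # True True
```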

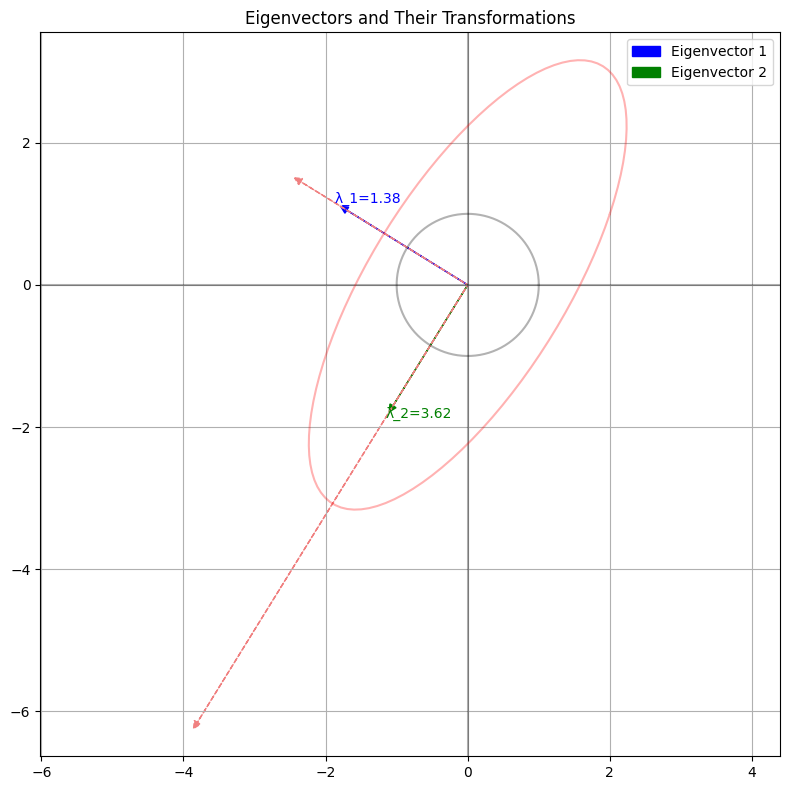

4. Eigenvalues and Eigenvectors

Eigenvalues:
λ_1 = 1.3820
λ_2 = 3.6180
Eigenvectors (as columns):
[[-0.85065081 -0.52573111]
 [ 0.52573111 -0.85065081]]

The eigenvalues and eigenvectors of the transformation matrix tell us about the fundamental behavior of the transformation:

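- The eigenvalues are $\lambda_1 \approx 1.38$ and $\lambda_2 \approx 3.62$

- The corresponding unit eigenvectors are the columns of the printed matrix: approximately $(-0.851, 0.526)$ for $\lambda_1$ and $(-0.526, -0.851)$ for $\lambda_2$ (each is determined only up to sign)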
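You can check the printed eigenvalues by hand. For a 2×2 matrix, the eigenvalues are the roots of the characteristic polynomial $\det(T - \lambda I) = 0$:

$$
\begin{aligned}
\det(T - \lambda I) &= \begin{vmatrix} 2-\lambda & 1 \\ 1 & 3-\lambda \end{vmatrix} = (2-\lambda)(3-\lambda) - 1 = \lambda^2 - 5\lambda + 5 = 0, \\
\lambda_{1,2} &= \frac{5 \mp \sqrt{5}}{2} \approx 1.3820,\ 3.6180.
\end{aligned}
$$

As a consistency check, $\lambda_1 + \lambda_2 = 5 = \operatorname{tr}(T)$ and $\lambda_1 \lambda_2 = 5 = \det(T)$.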

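An eigenvector is a nonzero vector $\vec{v}$ that $T$ maps to a scalar multiple of itself, $T\vec{v} = \lambda\vec{v}$, where $\lambda$ is the corresponding eigenvalue. Both eigenvalues here are positive, so each eigenvector keeps its direction and is only stretched.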
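This short NumPy sketch reproduces the eigendecomposition and checks the defining property $T\vec{v} = \lambda\vec{v}$ for each column returned by `np.linalg.eig`. NumPy does not guarantee the order of the eigenvalues from `eig`, and each eigenvector is only determined up to sign, so the order and signs may differ from the output above. For symmetric matrices like this one, `np.linalg.eigh` is an alternative that returns eigenvalues in ascending order.

```python
import numpy as np

T = np.array([[2.0, 1.0],
              [1.0, 3.0]])

# eigenvalues[i] pairs with the column eigenvectors[:, i].
eigenvalues, eigenvectors = np.linalg.eig(T)

for lam, v in zip(eigenvalues, eigenvectors.T):
    # T v should equal lambda * v, up to floating-point error.
    print(f"lambda = {lam:.4f}, T v == lambda v: {np.allclose(T @ v, lam * v)}")
```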

The second visualization shows these eigenvectors and how they’re affected by the transformation.
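To make the contrast in the eigenvector plot concrete, the sketch below measures how far $T$ turns a vector, as the angle between $\vec{v}$ and $T\vec{v}$. The helper name `turn_angle` is hypothetical. The eigenvectors should come out at essentially 0 degrees, while a generic vector such as $(1, 0)$ is turned noticeably.

```python
import numpy as np

T = np.array([[2.0, 1.0],
              [1.0, 3.0]])

def turn_angle(T, v):
    """Hypothetical helper: angle in degrees between v and T v."""
    w = T @ v
    cos = np.dot(v, w) / (np.linalg.norm(v) * np.linalg.norm(w))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))

_, eigenvectors = np.linalg.eig(T)
for v in eigenvectors.T:
    print(f"eigenvector {np.round(v, 3)}: turned {turn_angle(T, v):.2f} degrees")

# A non-eigenvector for comparison: (1, 0) maps to (2, 1).
print(f"(1, 0): turned {turn_angle(T, np.array([1.0, 0.0])):.2f} degrees")
```

Because $(1, 0)$ maps to $(2, 1)$, its turn angle is $\arctan(1/2) \approx 26.57$ degrees.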

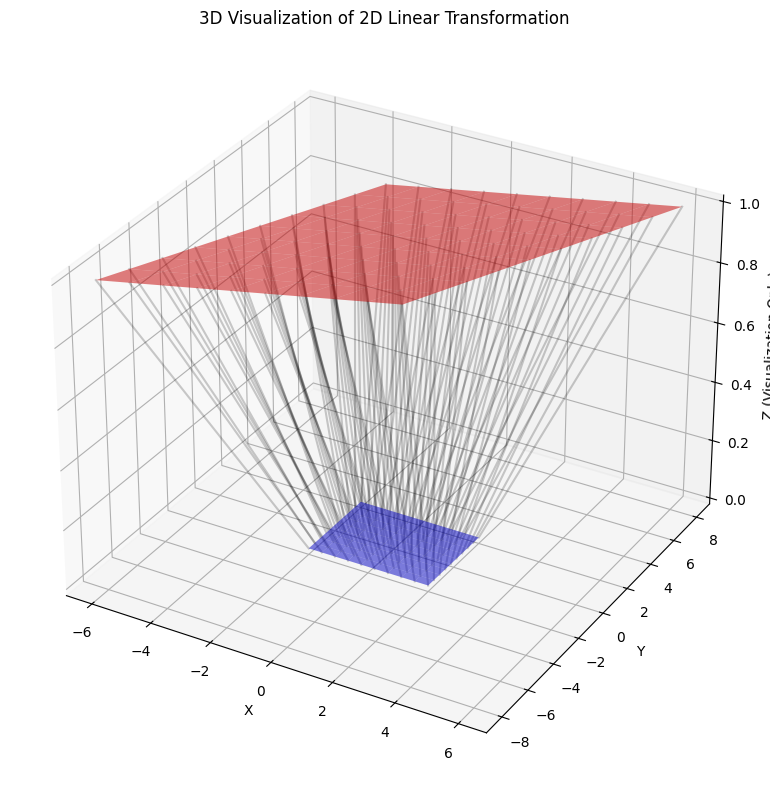

5. 3D Visualization

The final visualization provides a 3D perspective of the transformation, showing how a grid of points in the original space maps to points in the transformed space.
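The post does not show its 3D plotting code, so this sketch covers only the part the paragraph describes in words: building a grid of points and mapping all of them through $T$ at once. The grid size and range are arbitrary choices. Passing `X, Y` and `TX, TY` to a plotting routine is omitted.

```python
import numpy as np

T = np.array([[2, 1],
              [1, 3]])

# A 5x5 grid of points with coordinates in [-1, 1] (range and size are arbitrary).
xs = np.linspace(-1, 1, 5)
X, Y = np.meshgrid(xs, xs)

# Stack into a 2 x N array of column vectors, transform, and reshape back.
grid = np.vstack([X.ravel(), Y.ravel()])
TX, TY = (T @ grid).reshape(2, *X.shape)

# Each original point (X[i, j], Y[i, j]) maps to (TX[i, j], TY[i, j]).
print(X[0, 0], Y[0, 0], "->", TX[0, 0], TY[0, 0])
```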

Key Insights

Direction of Maximum Stretching:

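The eigenvector corresponding to the larger eigenvalue ($\lambda_2 \approx 3.62$) indicates the direction in which the transformation stretches vectors the most. This holds because $T$ is symmetric with positive eigenvalues, so its eigenvalues are also its singular values.

Shape Distortion: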

The transformation turns the square into a parallelogram. This shows how linear transformations preserve straight lines and parallelism but can alter angles and distances.

Area Scaling:

The determinant of the transformation matrix, $\det(T) = 2 \cdot 3 - 1 \cdot 1 = 5$, tells us that the transformation scales areas by a factor of 5. You can see this in the larger size of the transformed square.

Eigenvector Behavior:

Note how the eigenvectors are only scaled (not rotated) when the transformation is applied.

This property makes eigenvectors particularly useful in understanding linear transformations.
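Two of these insights can be checked numerically. The sketch samples many unit vectors, finds the one that $T$ lengthens most, and compares it with the eigenvector for $\lambda_2$. It then computes the area of the transformed unit square with the shoelace formula and compares it with $\det(T)$. The sample count is an arbitrary choice, and sampling a half circle is enough because $\lVert T(-\vec{u}) \rVert = \lVert T\vec{u} \rVert$.

```python
import numpy as np

T = np.array([[2.0, 1.0],
              [1.0, 3.0]])

# 1) Direction of maximum stretching: sample unit vectors on a half circle.
angles = np.linspace(0, np.pi, 100_000, endpoint=False)
units = np.vstack([np.cos(angles), np.sin(angles)])
lengths = np.linalg.norm(T @ units, axis=0)
best = units[:, np.argmax(lengths)]

eigenvalues, eigenvectors = np.linalg.eigh(T)  # ascending order for symmetric T
v_max = eigenvectors[:, -1]                   # eigenvector for the larger eigenvalue
print(f"max stretch {lengths.max():.4f} vs lambda_2 {eigenvalues[-1]:.4f}")
print(f"|cos| between directions: {abs(best @ v_max):.6f}")

# 2) Area scaling: shoelace area of the image of the unit square.
x, y = T @ np.array([[0, 1, 1, 0],
                     [0, 0, 1, 1]], dtype=float)
area = 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))
print(f"area {area:.4f} vs det(T) {np.linalg.det(T):.4f}")
```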

This example demonstrates the fundamental concepts of linear transformations and how we can visualize and analyze them using Python’s numerical and visualization libraries.