Problem Description

In information theory, Shannon entropy quantifies the amount of uncertainty in a probability distribution.

It is given by the formula:

$$H(X) = -\sum_{i=1}^{n} P(x_i)\log_2 P(x_i)$$

- $P(x_i)$ is the probability of the $i$-th event, and $n$ is the number of possible events.

- $H(X)$ is the entropy in bits (the unit follows from the base-2 logarithm).
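As a quick check of the formula, here are two worked cases that reappear in the results later on: a fair coin (two events, each with probability 1/2) and a uniform distribution over four events.

```latex
H_{\text{coin}} = -\sum_{i=1}^{2} \tfrac{1}{2}\log_2 \tfrac{1}{2} = -2 \cdot \tfrac{1}{2} \cdot (-1) = 1 \text{ bit}

H_{\text{uniform}} = -\sum_{i=1}^{4} \tfrac{1}{4}\log_2 \tfrac{1}{4} = -4 \cdot \tfrac{1}{4} \cdot (-2) = 2 \text{ bits}
```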

We will:

- Calculate the Shannon entropy of a discrete probability distribution.

- Visualize how entropy changes with different probability distributions.

Python Solution

import numpy as np
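Only the import line of the original listing survives here. The following is a minimal sketch of a shannon_entropy() function that follows the description in the next section (input validation plus a 1e-10 offset for zero probabilities). It is a reconstruction under assumptions, not the author's code: the tolerance for the sum-to-one check, the error messages, and placing the offset inside the logarithm are all choices made for this sketch.

```python
import numpy as np


def shannon_entropy(probabilities):
    """Return the Shannon entropy, in bits, of a discrete distribution."""
    p = np.asarray(probabilities, dtype=float)

    # Validate the input: probabilities must be non-negative and sum to 1.
    # The 1e-8 tolerance is an assumed allowance for floating-point round-off.
    if np.any(p < 0):
        raise ValueError("Probabilities must be non-negative.")
    if not np.isclose(p.sum(), 1.0, atol=1e-8):
        raise ValueError("Probabilities must sum to 1.")

    # H = -sum(p * log2(p)); the 1e-10 offset keeps log2 from being evaluated at 0.
    return -np.sum(p * np.log2(p + 1e-10))


distributions = {
    "Uniform": [0.25, 0.25, 0.25, 0.25],
    "Biased": [0.7, 0.1, 0.1, 0.1],
    "Highly Skewed": [0.95, 0.05],
    "Equal Binary": [0.5, 0.5],
}

for name, probs in distributions.items():
    print(f"Entropy of {name} distribution: {shannon_entropy(probs):.4f} bits")
```

Because the offset is tiny, it shifts the results only far below the fourth decimal place, so the printed values agree with the ones reported under Results.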

Explanation of the Code

Shannon Entropy Calculation:

- The function shannon_entropy() takes a list of probabilities as input.

- It ensures the input is valid (the probabilities are non-negative and sum to 1).

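- The entropy formula is implemented as $-\sum P(x)\log_2 P(x)$, with a small offset (1e-10) to handle zero probabilities: without it, $\log_2 0$ would evaluate to $-\infty$; with it, a zero-probability term contributes (approximately) 0, matching the convention $0 \log_2 0 = 0$.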


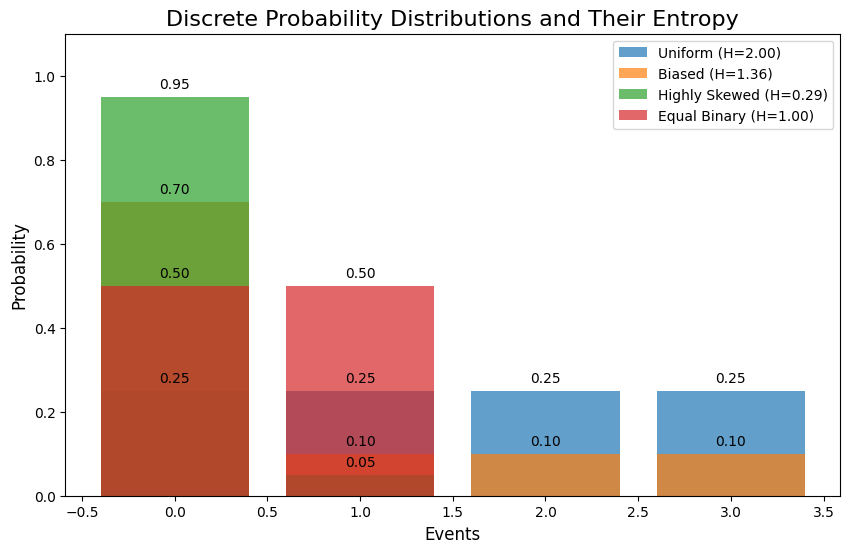
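To see why the 1e-10 offset is needed, note that NumPy evaluates log2(0) as -inf, and 0 * -inf is nan, which poisons the whole sum. The sketch below compares the naive formula, the offset approach, and masking out zero entries. Masking is a swapped-in alternative shown for comparison, not the technique the original code uses.

```python
import numpy as np

p = np.array([0.5, 0.5, 0.0])

# Naive formula: log2(0) is -inf and 0 * -inf is nan, so the sum becomes nan.
with np.errstate(divide="ignore", invalid="ignore"):
    naive = -np.sum(p * np.log2(p))

# Offset approach: a tiny constant inside the log keeps every term finite.
offset = -np.sum(p * np.log2(p + 1e-10))

# Masking alternative: drop zero entries, using the convention 0 * log2(0) = 0.
nonzero = p[p > 0]
masked = -np.sum(nonzero * np.log2(nonzero))

print(naive, offset, masked)
```

The offset result differs from the exact 1 bit only by a negligible amount. Masking gives the exact value but needs a filtering step. Either choice is reasonable for a small example like this one.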

Example Distributions:

- Uniform: All events are equally likely ([0.25,0.25,0.25,0.25]).

- Biased: One event dominates ([0.7,0.1,0.1,0.1]).

- Highly Skewed: One event is almost certain ([0.95,0.05]).

- Equal Binary: Two equally likely events ([0.5,0.5]).

Visualization:

- Each distribution is plotted as a bar chart, with labels showing the probabilities and their corresponding entropies.
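A minimal sketch of this kind of plot, assuming matplotlib is available. The one-panel-per-distribution layout, the x1, x2, … bar labels, the helper name entropy_bits, and the output filename entropy_distributions.png are all illustrative choices, not details taken from the original code.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; remove this line to get an interactive window
import matplotlib.pyplot as plt
import numpy as np


def entropy_bits(p):
    """Shannon entropy in bits, with a 1e-10 offset to avoid log2(0)."""
    p = np.asarray(p, dtype=float)
    return -np.sum(p * np.log2(p + 1e-10))


distributions = {
    "Uniform": [0.25, 0.25, 0.25, 0.25],
    "Biased": [0.7, 0.1, 0.1, 0.1],
    "Highly Skewed": [0.95, 0.05],
    "Equal Binary": [0.5, 0.5],
}

fig, axes = plt.subplots(1, len(distributions), figsize=(16, 4))
for ax, (name, probs) in zip(axes, distributions.items()):
    h = entropy_bits(probs)
    labels = [f"x{i + 1}" for i in range(len(probs))]
    # The legend entry for the bars carries the entropy value.
    ax.bar(labels, probs, label=f"H = {h:.4f} bits")
    # Write each probability just above its bar.
    for i, prob in enumerate(probs):
        ax.text(i, prob + 0.02, f"{prob:.2f}", ha="center")
    ax.set_title(name)
    ax.set_ylim(0, 1.1)
    ax.set_ylabel("Probability")
    ax.legend()

fig.tight_layout()
fig.savefig("entropy_distributions.png")
```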

Results

Entropy Values:

Entropy of Uniform distribution: 2.0000 bits
Entropy of Biased distribution: 1.3568 bits
Entropy of Highly Skewed distribution: 0.2864 bits
Entropy of Equal Binary distribution: 1.0000 bits

- Uniform Distribution: H = 2.0 bits (the maximum for 4 events, since log2 4 = 2).

- Biased Distribution: H = 1.3568 bits.

- Highly Skewed Distribution: H = 0.2864 bits (little uncertainty, since one outcome occurs 95% of the time).

- Equal Binary Distribution: H = 1.0 bit (the maximum for 2 events).
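The claim that 2 bits is the maximum for 4 events generalizes: a uniform distribution over n events has entropy log2 n, and no other distribution over n events exceeds it. The sketch below checks both parts numerically. The helper name entropy_bits and the use of seeded Dirichlet draws to generate random distributions are illustrative assumptions.

```python
import numpy as np


def entropy_bits(p):
    """Shannon entropy in bits along the last axis; assumes all entries are > 0."""
    p = np.asarray(p, dtype=float)
    return -np.sum(p * np.log2(p), axis=-1)


# A uniform distribution over n events reaches exactly log2(n) bits.
for n in (2, 4, 8):
    print(n, entropy_bits(np.full(n, 1.0 / n)), np.log2(n))

# Random distributions over 4 events (seeded Dirichlet draws) stay below log2(4) = 2 bits.
rng = np.random.default_rng(0)
samples = rng.dirichlet(np.ones(4), size=1000)  # shape (1000, 4); each row sums to 1
random_entropies = entropy_bits(samples)
print(random_entropies.max())
```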

Graph:

- The bar chart shows the probability distributions for each example.

- Entropy values are displayed in the legend.

Insights

Uniform Distribution:

- Maximizes entropy: with all $n$ events equally likely, $H(X) = \log_2 n$, the largest value any distribution over $n$ events can have.

- Maximum uncertainty about the outcome.

Biased and Highly Skewed Distributions:

- Lower entropy as probabilities become more uneven.

- Greater certainty about likely outcomes.
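For two outcomes, this trend can be traced with the binary entropy of [p, 1 - p]: as p moves from 0.5 toward 1, the entropy falls from 1 bit toward 0. The helper name binary_entropy is an illustrative choice. The p = 0.95 row corresponds to the Highly Skewed example.

```python
import numpy as np


def binary_entropy(p):
    """Entropy in bits of the two-outcome distribution [p, 1 - p], for 0 < p < 1."""
    q = 1.0 - p
    return -(p * np.log2(p) + q * np.log2(q))


for p in (0.5, 0.7, 0.9, 0.95, 0.99):
    print(f"p = {p:.2f}: H = {binary_entropy(p):.4f} bits")
```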

Equal Binary Distribution:

- Entropy is 1 bit, which aligns with the classic case of a fair coin toss.

Conclusion

This example illustrates how entropy quantifies uncertainty in probability distributions.

It provides insights into how information theory applies to real-world problems like communication systems, cryptography, and data compression.

The Python implementation and visualization make these concepts clear and accessible.